proof that progress matters

AI session summaries

New UX

TL;DR

I led design for AI Session Summaries, a feature that helped students and parents understand what was achieved in each tutoring session. By introducing low-fidelity, evidence-based design practices and coaching a junior designer through strategic execution, we increased session recap engagement by 47.7%, second-session bookings by 15.2%, and retention by 8.79 percentage points. That amounts to a $10M annual revenue opportunity tied to student success and parent trust.

Customer opportunity

Parents needed better visibility into their child’s learning progress. Only 8–12% of customers engaged with Session Recaps in the first week, yet those who did were 43% more likely to book a second session. Parents frequently requested easier access to progress tracking and more personalized summaries of what their student accomplished. Students, meanwhile, wanted continuity and practice between sessions.

Business opportunity

Improving awareness and usability of Session Summaries tied directly to Varsity Tutors’ retention North Star metrics: “2 in 14” (two sessions within two weeks) and the 37-day survival rate. Increasing recap engagement by just 4% could translate into 2–3% more students staying active after one month, representing millions in annual revenue.

Solution

We redesigned the Session Summaries experience to make learning progress tangible and trustworthy:

AI-generated summaries captured session highlights, progress, and next steps automatically.

Multi-session summaries and progress tracking encouraged families to follow learning journeys over time.

Contextual entry points (after class, via email, or dashboard) improved visibility and reduced friction.

Visual differentiation made recaps feel personalized and engaging rather than transactional.

My role

As Design Manager & Player-Coach, I led strategy and hands-on design while managing a junior designer:

Partnered with PMs and engineers to prioritize retention outcomes over feature output.

Introduced fidelity progression frameworks (Word Docs → low-fi AI prototypes → hi-fi) to cut wasteful iteration cycles.

Ran journey-mapping workshops to align teams around user goals instead of page features.

Advocated for evidence-based validation, translating intuition into measurable hypotheses and A/B tests.

Modeled cross-functional collaboration by co-sketching, sharing early work, and reinforcing trust through contribution.

Results & Impact

+8.79 p.p. increase in retention (“2 in 14” rate: 65% → 73%+)

+47.7% increase in video recap views

+15.2% increase in second-session bookings

99% positive AI summary ratings

Established Process Playbook principles, now standard practice for the design org

Strategic takeaways

Start with Evidence, Not Aesthetics: Data builds confidence in bold design moves.

Use Fidelity to Build Strategic Thinking: Match design fidelity to idea maturity.

Design for Journeys, Not Pages: Retention happens across moments, not screens.

Coach Through Doing, Not Telling: Modeling collaboration accelerates designer growth.

Please reach out if you’d like to see a more comprehensive version of this case study.

Previous UX

Lo-fi artifacts throughout the process

Thinking through format and function to meet user needs

More case studies


Varsity Tutors Design System

Audit-to-implementation workflow showing the new Varsity Tutors design system foundations.


Agentic practice problems and UI generation

Defined the design framework and prompting architecture that enabled agents to turn tutoring transcripts into personalized practice content and UI within seconds.


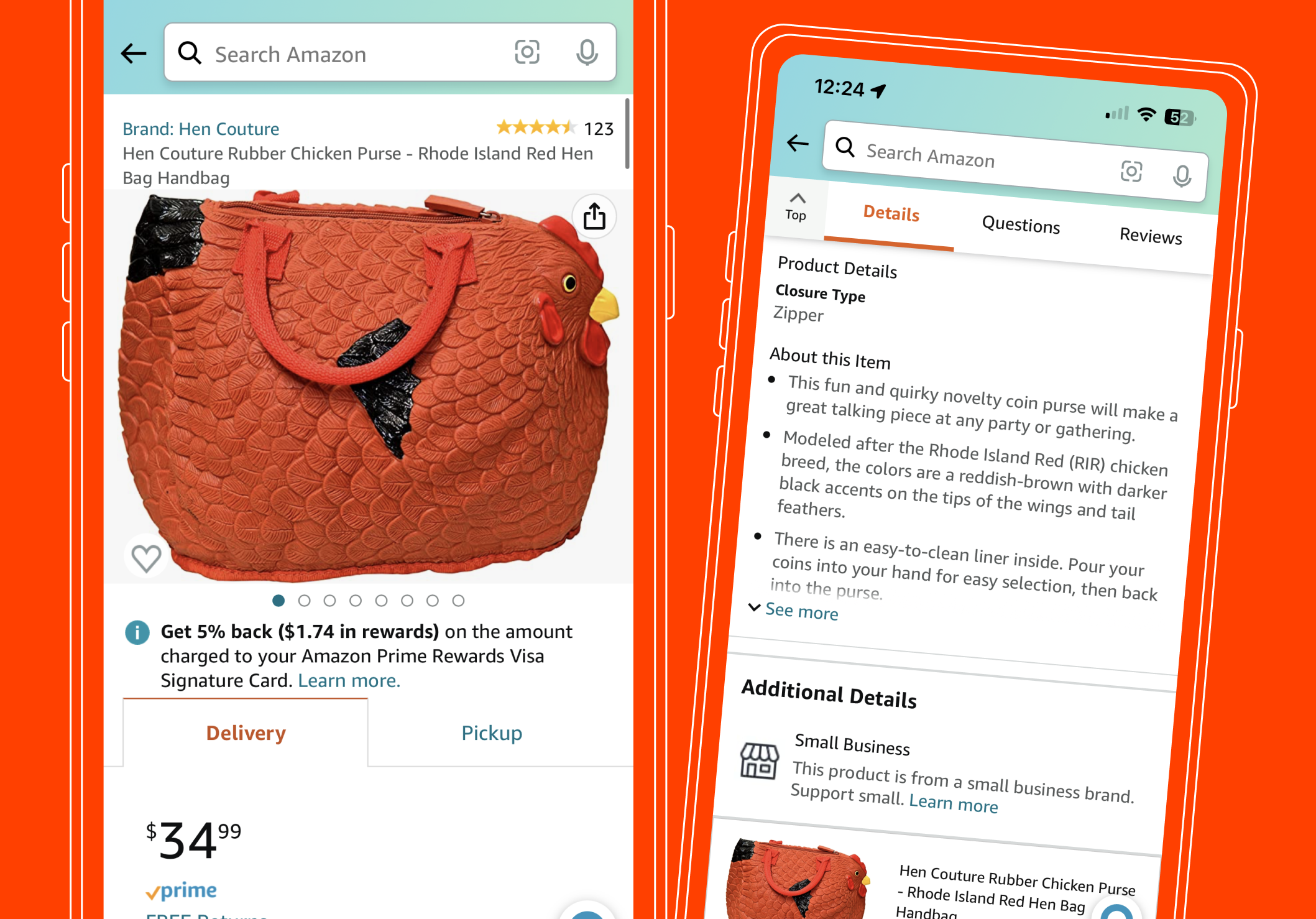

Enhanced visual product info on Amazon’s detail page

Redesigned the Amazon detail page to bring visuals to the forefront, introducing immersive vertical reels and layered product details. Experiments drove $282M in revenue and +9.1M units sold.