Personalized practice, powered by agents

Agentic practice problems and UI generation

Context

After strong engagement during live sessions, many Varsity Tutors students lost momentum between meetings. Parents wanted evidence of progress, but students were met with generic homework that didn’t reflect what they’d just learned.

I explored how we could use Anthropic’s Workbench and Claude to generate session-specific practice problems and autonomously build matching UI. This meant redefining what “post-session UX” could be, moving from static homework to an intelligent, agent-driven experience personalized by age, subject, and learning style.

Goals

Increase the percentage of students who complete practice after a session

Lift 37-day retention and “second-session-within-14-days” rates

Test agentic personalization for scalability and educational integrity

Process

I began by mapping how session summaries, student metadata, and concept tagging could form the contextual foundation for AI-driven practice. The goal was to make practice problems feel like a natural continuation of the tutoring experience—not a disconnected task.

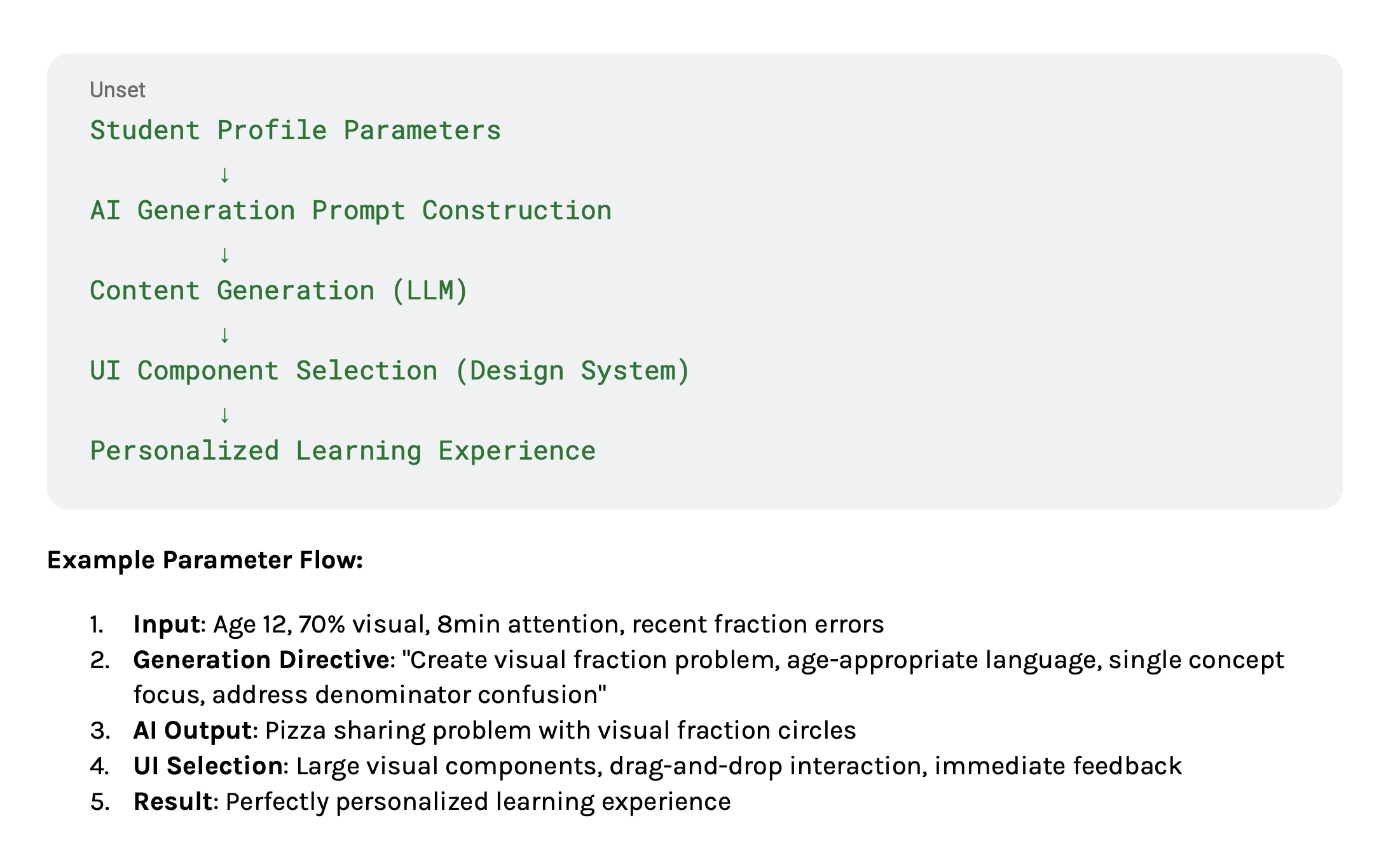

Working closely with engineering, I designed a three-prompt pipeline that powered each personalized experience:

Session Analysis Prompt – Extracts learning insights from the transcript

Content Generation Prompt – Creates targeted problems or games

UI Generation Prompt – Produces a React component using Varsity Tutors’ design tokens

This “analysis → content → UI” chain ensured every experience was personalized yet visually consistent.
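As a rough illustration of that chain, the sketch below wires the three prompts together so each one is built from the previous reply. It is a minimal sketch under stated assumptions, not the production pipeline: `SessionContext`, its field names, the prompt wording, and the `ModelCall` signature are all hypothetical, and the model client is passed in rather than tied to any specific SDK.

```typescript
// Hypothetical shape: field names are illustrative, not the production schema.
interface SessionContext {
  transcript: string;
  subject: string;
  studentAge: number;
  learningStyle: string;
}

// Assumption: any function that sends a prompt to a model and resolves with its text reply.
type ModelCall = (prompt: string) => Promise<string>;

// Step 1: ask for learning insights from the session transcript.
function sessionAnalysisPrompt(ctx: SessionContext): string {
  return [
    `Subject: ${ctx.subject}`,
    `Student age: ${ctx.studentAge}`,
    `Learning style: ${ctx.learningStyle}`,
    "List the concepts covered and where the student struggled.",
    `Transcript:\n${ctx.transcript}`,
  ].join("\n");
}

// Step 2: turn the insights into targeted problems or a game.
function contentGenerationPrompt(insights: string): string {
  return `Write practice problems that target these insights:\n${insights}`;
}

// Step 3: render the content as a React component limited to approved tokens.
function uiGenerationPrompt(content: string, tokenNames: string[]): string {
  return [
    "Produce a React component for the content below.",
    `Use only these design tokens: ${tokenNames.join(", ")}`,
    content,
  ].join("\n");
}

// Chain the three steps: analysis -> content -> UI.
async function runPipeline(
  ctx: SessionContext,
  callModel: ModelCall,
  tokenNames: string[],
): Promise<string> {
  const insights = await callModel(sessionAnalysisPrompt(ctx));
  const content = await callModel(contentGenerationPrompt(insights));
  return callModel(uiGenerationPrompt(content, tokenNames));
}
```

Keeping the prompt builders as pure functions makes each step easy to review and test on its own, while `runPipeline` only handles the ordering.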

Using Anthropic Workbench, I created structured prompts that turned student data into both learning parameters and design constraints.

To balance AI autonomy with UX quality, I led the creation of design-token guardrails that constrained color, spacing, and typography. We explored three approaches for how much design control to give the agent:

Branded Templates — Pre-built layouts for each learning modality

Trade-off: Stable and fast to implement, but can feel repetitive

Design System + AI Layouts — Agent composes layouts within established design-token rules

Trade-off: Balances personalization with visual and brand consistency

Full AI Generation — Agent freely generates layout and style

Trade-off: Highly engaging, but risks inconsistency and accessibility issues

We launched with Option 2, allowing personalized presentation while preserving brand integrity and accessibility standards.
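One way to picture the Option 2 guardrails is as an allow-list check on whatever layout the agent proposes. The sketch below is illustrative only: the token names, the `LayoutBlock` shape, and `validateLayout` are assumptions made for this example, not the actual Varsity Tutors token set or validation code.

```typescript
// Hypothetical token set: names are placeholders, not the real design tokens.
const designTokens = {
  color: ["color.primary", "color.surface", "color.text"],
  spacing: ["space.sm", "space.md", "space.lg"],
  typography: ["type.body", "type.heading"],
} as const;

type TokenCategory = keyof typeof designTokens;

// A layout block as the agent might propose it: one token reference per category.
interface LayoutBlock {
  id: string;
  color: string;
  spacing: string;
  typography: string;
}

// Returns a list of violations; an empty list means the layout stays within the guardrails.
function validateLayout(blocks: LayoutBlock[]): string[] {
  const violations: string[] = [];
  const categories = Object.keys(designTokens) as TokenCategory[];
  for (const block of blocks) {
    for (const category of categories) {
      const allowed: readonly string[] = designTokens[category];
      if (!allowed.includes(block[category])) {
        violations.push(
          `${block.id}: ${category} "${block[category]}" is not an approved token`,
        );
      }
    }
  }
  return violations;
}
```

A check like this lets the agent compose layouts freely while anything outside the approved color, spacing, and typography tokens is caught before it reaches a student.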

My role

Defined the agentic UX strategy and the cross-functional roadmap

Partnered with engineers to build the prompt architecture and Workbench flow

Created the UI library and token rules that guided AI-generated layouts

Facilitated design reviews and prompt experiments with PMs

Presented the end-to-end prototype to leadership using v0

This project combined hands-on design with organizational alignment—illustrating how AI can extend, not replace, the designer’s role.

Outcomes

Delivered the first working prototype linking tutoring data → AI-generated content → usable UI

Established the prompt-chain and design-system constraint model

Case studies

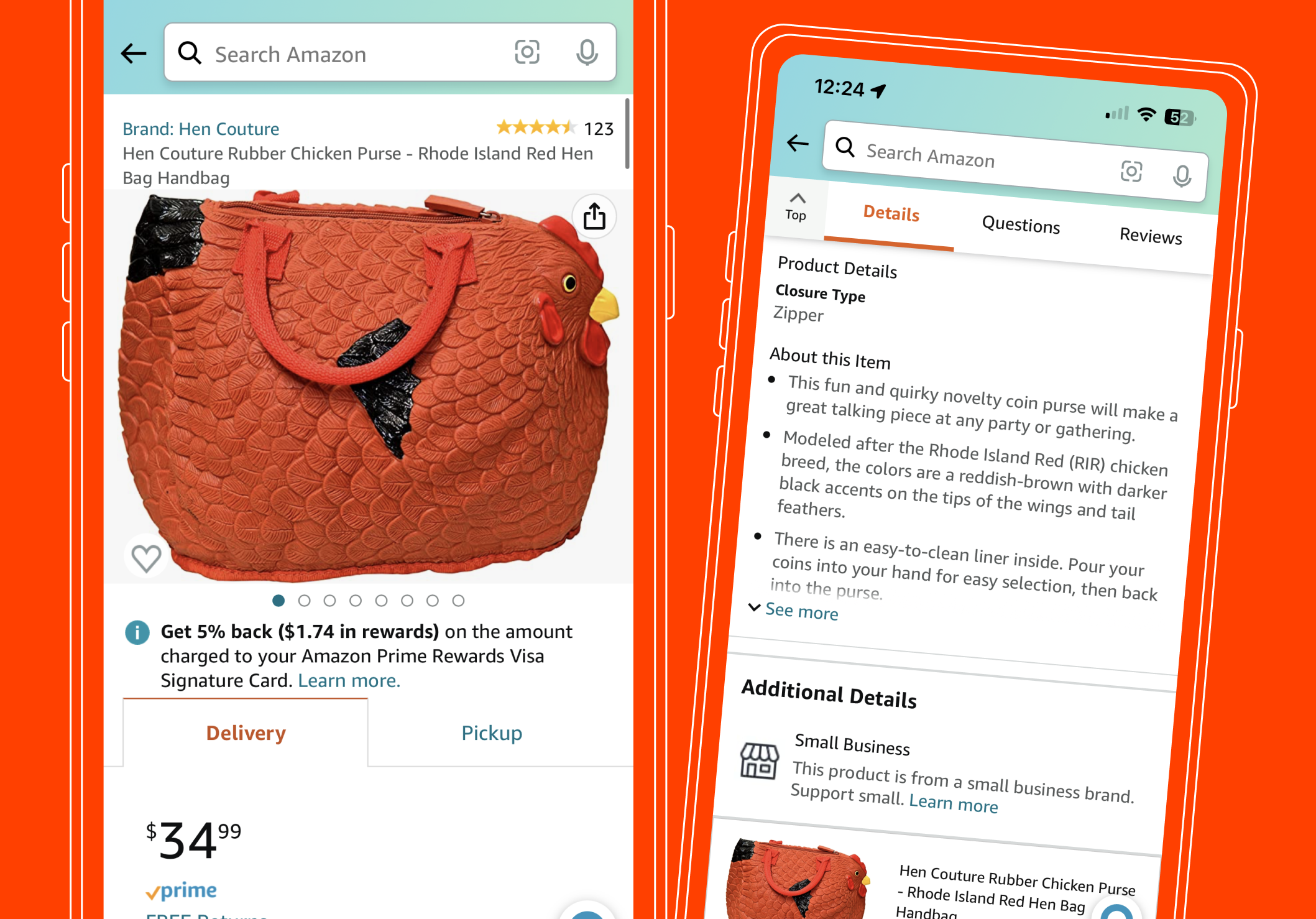

Enhanced visual product info on Amazon’s detail page

Redesigned the Amazon detail page to bring visuals to the forefront, introducing immersive vertical reels and layered product details. Experiments drove $282M in revenue and +9.1M units sold.

Lightning deal visibility

Addressed customer frustration with missed Prime Day deals by improving deal timer visibility. The fix drove $167M in annualized revenue and earned an Amazon Empty Desk Award nomination.

Amazon Deals overhaul

Created a dynamic badging system to unify deal displays across the site. The redesign improved trust, clarity, and engagement, driving $119.5M in incremental revenue.